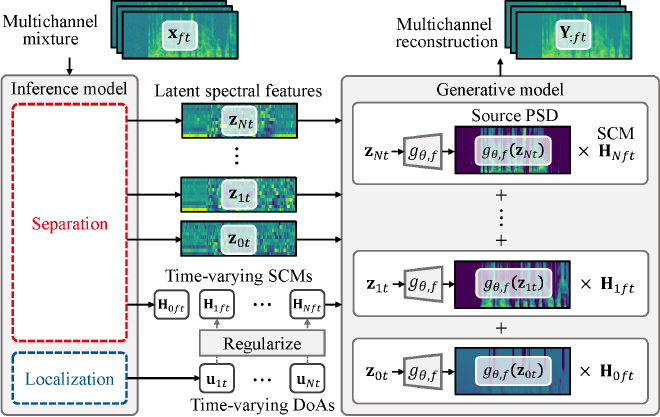

Time-Varying Neural Full-Rank Spatial Covariance Analysis (TV Neural FCA)

| Title | Joint Separation and Localization of Moving Sound Sources Based on Neural Full-Rank Spatial Covariance Analysis |
| --- | --- |
| Authors | Hokuto Munakata, Yoshiaki Bando, Ryu Takeda, Kazunori Komatani, Masaki Onishi |
| Journal | IEEE Signal Processing Letters (2023) |
| Contents | PDF (OA on IEEE Xplore) |

Separation results for real recordings

We separated 6-channel mixture signals recorded in our experimental room using the neural network evaluated in the paper. Beforehand, the mixture signals were dereverberated with the weighted prediction error (WPE) method.
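The WPE preprocessing step mentioned above can be illustrated with a minimal NumPy sketch for a single frequency bin of a multichannel STFT. This is not the implementation used for the recordings on this page: the function name `wpe_one_bin` and the values of `taps`, `delay`, `iterations`, and `eps` are assumptions chosen for illustration, since the page does not state the WPE settings.

```python
import numpy as np

def wpe_one_bin(Y, taps=5, delay=2, iterations=3, eps=1e-8):
    """Illustrative WPE dereverberation for one frequency bin.

    Y: complex array of shape (channels, frames).
    All default parameter values are assumptions, not the page's settings.
    """
    D, T = Y.shape
    # Stack delayed copies of the observation: shape (D * taps, T).
    Y_tilde = np.zeros((D * taps, T), dtype=Y.dtype)
    for k in range(taps):
        shift = delay + k
        Y_tilde[k * D:(k + 1) * D, shift:] = Y[:, :T - shift]

    X = Y.copy()
    for _ in range(iterations):
        # Time-varying power of the current estimate, averaged over channels.
        lam = np.maximum(np.mean(np.abs(X) ** 2, axis=0), eps)
        Yw = Y_tilde / lam                 # weight each frame by 1 / lam[t]
        R = Yw @ Y_tilde.conj().T          # weighted correlation, (D*taps, D*taps)
        P = Yw @ Y.conj().T                # weighted cross-correlation, (D*taps, D)
        # Small diagonal loading keeps the linear system well conditioned.
        R += eps * np.trace(R).real / (D * taps) * np.eye(D * taps)
        G = np.linalg.solve(R, P)          # prediction filter, (D*taps, D)
        # Subtract the predicted late reverberation from the observation.
        X = Y - G.conj().T @ Y_tilde
    return X
```

In practice the function would be applied independently to every frequency bin of the 6-channel STFT, and the dereverberated bins would then be passed to the separation stage. The sketch assumes the number of frames is larger than `delay + taps`.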

Result 1: Separation of two static sources

Src. 1 (static): This is a demonstration of time-varying neural FCA.

Src. 2 (static): これは時変深層フルランク空間相関分析のデモ動画です.

(Kore wa jihen sinsou furu-ranku kukan-soukan bunseki no demo douga desu; in English: "This is a demo video of time-varying neural full-rank spatial covariance analysis.")

Result 2: Separation of one static source and one moving source

Src. 1 (moving): This is a demonstration of time-varying neural FCA.

Src. 2 (static): これは時変深層フルランク空間相関分析のデモ動画です.

(Kore wa jihen sinsou furu-ranku kukan-soukan bunseki no demo douga desu; in English: "This is a demo video of time-varying neural full-rank spatial covariance analysis.")

Result 3: Separation of two moving sources

Src. 1 (moving)

Src. 2 (moving)

Simulated mixtures

Mixtures with simulated source trajectories provide additional insight into direction-of-arrival (DOA)-aware separation.

Scene 1: 053a050b vs 051o020g

Src. 1

Src. 2

Scene 2: 22ha010r vs 22ga010e

Src. 1

Src. 2

Scene 3: 444c0209 vs 445c0209

Src. 1

Src. 2